| import torch

import torchvision

from PIL import Image

from torchvision import transforms as T

import matplotlib.pyplot as plt

import cv2

COCO_INSTANCE_CATEGORY_NAMES = [

'__background__', 'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus',

'train', 'truck', 'boat', 'traffic light', 'fire hydrant', 'N/A', 'stop sign',

'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

'elephant', 'bear', 'zebra', 'giraffe', 'N/A', 'backpack', 'umbrella', 'N/A', 'N/A',

'handbag', 'tie', 'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball',

'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard', 'tennis racket',

'bottle', 'N/A', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl',

'banana', 'apple', 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza',

'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed', 'N/A', 'dining table',

'N/A', 'N/A', 'toilet', 'N/A', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'N/A', 'book',

'clock', 'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush'

]

model = torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=True)

model.eval()

def get_prediction(img_path, threshold):

img = Image.open(img_path)

transform = T.Compose([T.ToTensor()])

img = transform(img)

pred = model([img])

pred_class = [COCO_INSTANCE_CATEGORY_NAMES[i] for i in list(pred[0]['labels'].numpy())]

pred_boxes = [[(i[0], i[1]), (i[2], i[3])] for i in list(pred[0]['boxes'].detach().numpy())]

pred_score = list(pred[0]['scores'].detach().numpy())

pred_t = [pred_score.index(x) for x in pred_score if x > threshold][-1]

pred_boxes = pred_boxes[:pred_t+1]

pred_class = pred_class[:pred_t+1]

return pred_boxes, pred_class

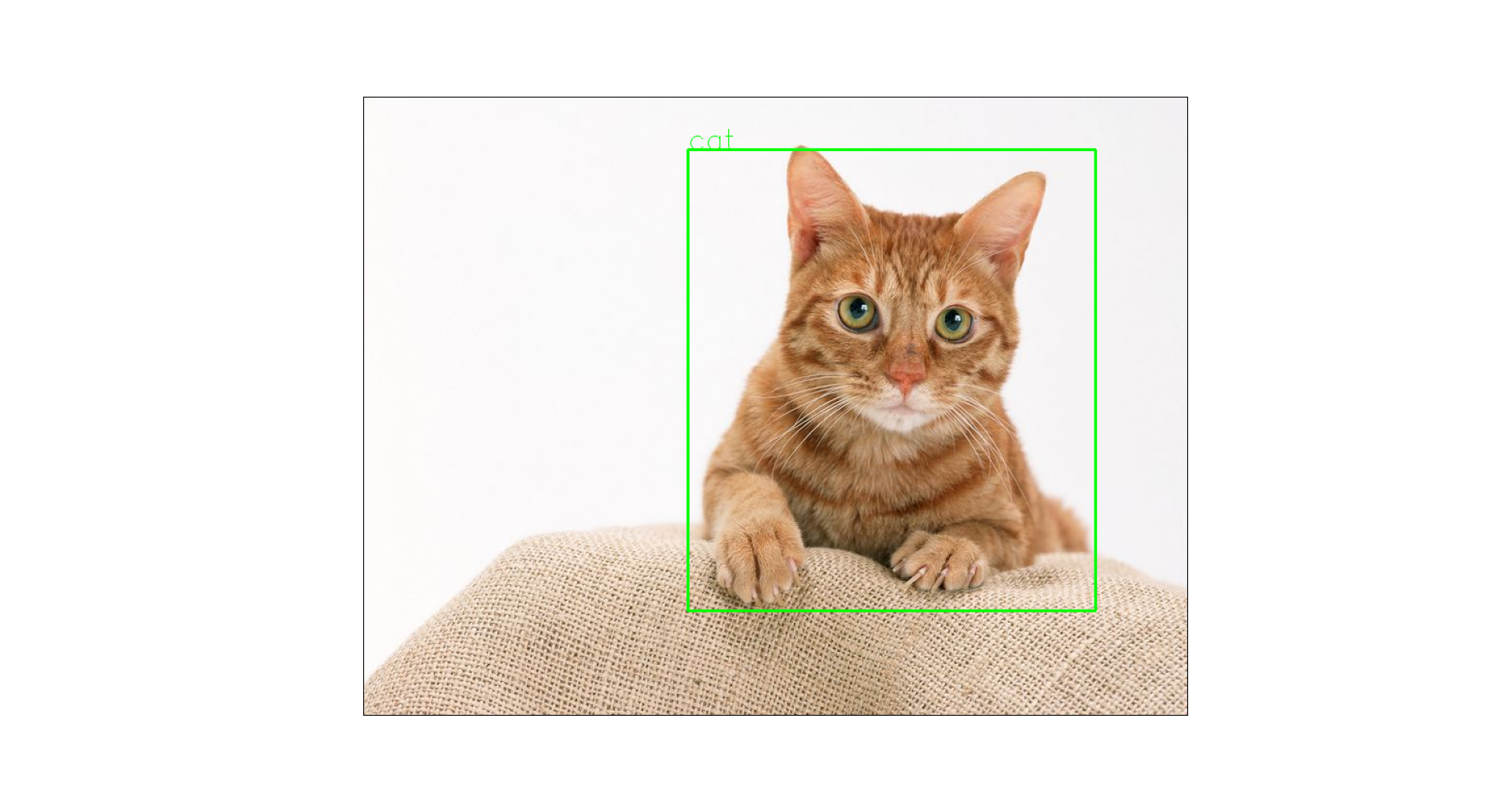

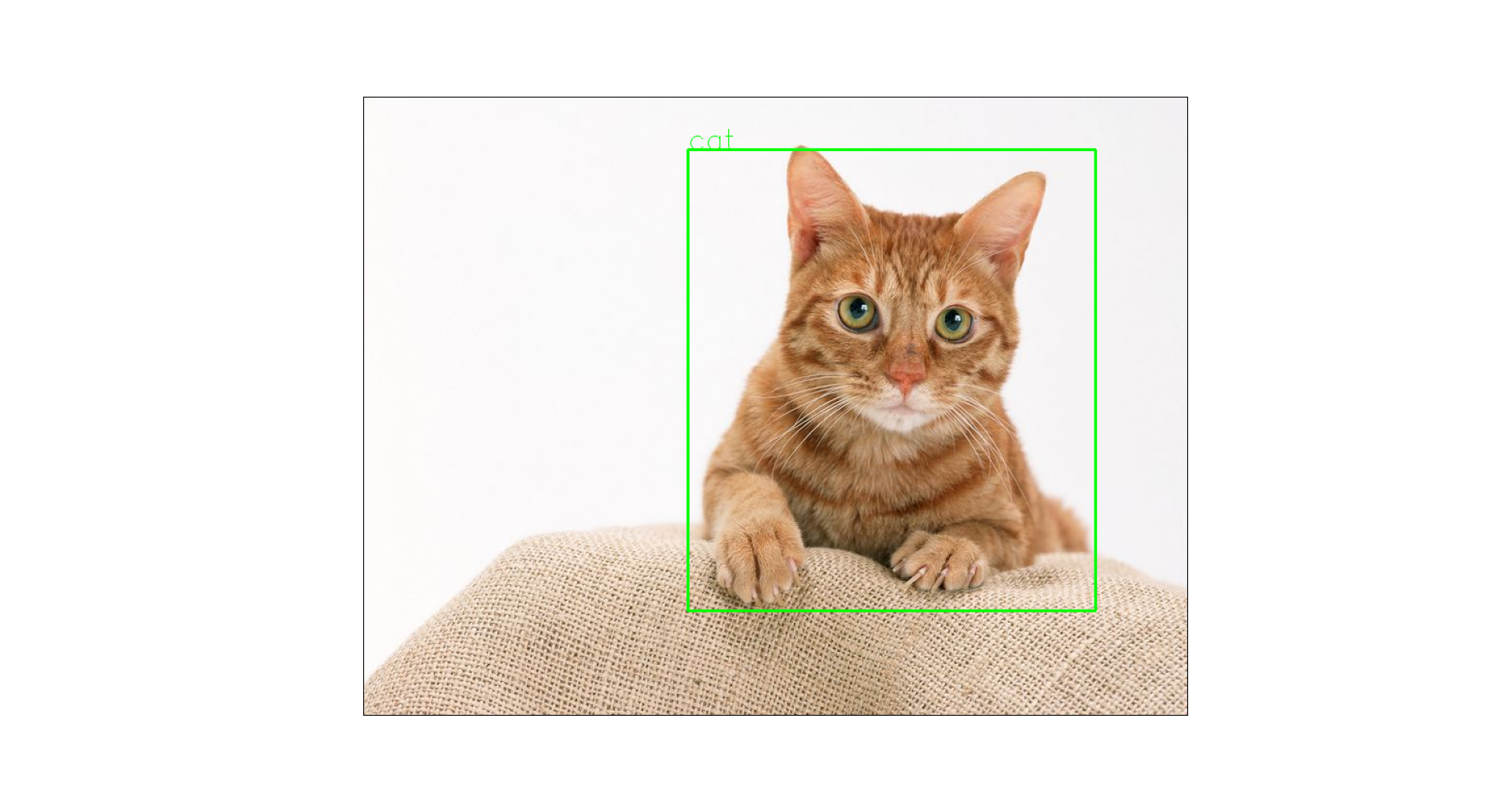

def object_detection_api(img_path, threshold=0.5, rect_th=3, text_size=3, text_th=3):

boxes, pred_cls = get_prediction(img_path, threshold)

img = cv2.imread(img_path)

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

for i in range(len(boxes)):

cv2.rectangle(img, (int(boxes[i][0][0]), int(boxes[i][0][1])), (int(boxes[i][1][0]), int(boxes[i][1][1])), color=(0, 255, 0), thickness=rect_th)

cv2.putText(img, pred_cls[i], (int(boxes[i][0][0]), int(boxes[i][0][1])), cv2.FONT_HERSHEY_SIMPLEX, text_size, (0, 255, 0), thickness=text_th)

plt.figure(figsize=(20, 30))

plt.imshow(img)

plt.xticks([])

plt.yticks([])

plt.show()

object_detection_api('timg.jpg', rect_th=2, text_th=1, text_size=1)

|
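The score-threshold step inside `get_prediction` can be tried on its own, without loading the model. The following is a minimal sketch under stated assumptions: the helper name `filter_detections` and the sample boxes, labels, and scores are hypothetical and are not real model output. It returns empty lists, rather than raising, when no score clears the threshold.

```python
def filter_detections(boxes, labels, scores, threshold=0.5):
    """Keep only the detections whose score is strictly above threshold."""
    kept = [(box, label) for box, label, score in zip(boxes, labels, scores)
            if score > threshold]
    if not kept:
        # Nothing cleared the threshold: return empty lists instead of failing.
        return [], []
    kept_boxes, kept_labels = zip(*kept)
    return list(kept_boxes), list(kept_labels)


# Hypothetical detections in the same layout get_prediction uses:
# each box is [(x1, y1), (x2, y2)].
boxes = [[(10, 20), (110, 220)], [(30, 40), (90, 100)], [(5, 5), (50, 50)]]
labels = ['person', 'dog', 'kite']
scores = [0.98, 0.72, 0.31]

print(filter_detections(boxes, labels, scores, threshold=0.5))
print(filter_detections(boxes, labels, scores, threshold=0.99))
```

With the sample values above, the first call keeps the `person` and `dog` detections and the second returns two empty lists, so a drawing loop over the result simply does nothing.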